Screen scraping is the process of collecting information displayed on a screen or user interface and passing it to another platform. Think of it as lifting content from a legacy green-screen terminal onto a modern, well-structured dashboard where you can readily access the data.

Have you ever wondered how a budgeting app reads your bank balance or past transactions and displays them on its own screen? That seemingly sophisticated process is a classic example of screen scraping.

This guide covers the basics of screen scraping, including how it works, especially in the banking context. It also explores legal and ethical considerations, breaks down the pros and cons, and touches on security, Python options, proxies, and alternatives.

What is Screen Scraping?

Screen scraping is the extraction of data rendered on a user interface. During this process, the scraper imitates regular user interaction with the platform, collects information from the display, and transfers it to another application.

The sort of visual data extracted in screen scraping ranges from text to images on a web page, desktop app, mobile screen, or terminal. Most screen scraping happens during legacy modernization or last-mile integrations when no API exists.

Although many people confuse screen scraping with web scraping, the concepts are different. While web scraping extracts data by parsing the underlying HTML/JSON of a website, screen scraping simply captures the content on the screen.

What Data Does It Capture?

Scrapers can extract rendered content from a display interface, including text, tables, charts, and images. If text isn’t directly accessible from the screen, the scraper uses optical character recognition (OCR) to convert pixel images into characters.

Although OCR is typically less accurate than reading native elements directly, it remains essential for canvases and scanned PDFs. Where accessibility attributes such as alt text or ARIA labels are present, they can improve the fidelity and reliability of captured text.

When is It Chosen Over APIs?

The following situations can warrant screen scraping over APIs:

- No API exists

- The APIs are either undocumented, incomplete, or behind a paywall

- Partner systems do not reveal structured feeds

- Digital transformation pilots are time-boxed

It is important to remember that screen scraping is usually fragile and more expensive to maintain than APIs. However, it still holds the potential to deliver quick value when it’s the only option. Because APIs can be expensive or unavailable upfront, scraping can be more cost-efficient in the short term, while APIs are usually more cost-efficient at larger scales.

How Does Screen Scraping Work?

Screen scraping is carried out through a bot or software program that accesses the customer’s account, automates the customer’s regular interaction with the interface, and captures the screen data. Here’s a rundown of what the scraping pipeline looks like:

- The screen-scraping tool is authenticated like a real user.

- Next up, the bot navigates to the target screen or display.

- The bot locates the relevant elements or regions (web: selectors; desktop: accessibility trees; fallback: coordinates).

- The tool extracts text through native methods or OCR.

- Afterward, the text is normalized and exported to structured formats.

Browser automation relies on selectors, while desktop automation uses accessibility trees or coordinates. Because selectors are usually tightly coupled to the document object model (DOM) structure, they are brittle and can break when the layout or CSS changes. More resilient strategies, such as anchors (locating an element relative to a stable label) or hierarchical locators, can reduce this drift.
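To see why anchors resist drift, compare a positional locator with an anchor-based one on a hypothetical account-summary table. This minimal sketch uses Python’s standard `xml.etree.ElementTree` on well-formed XHTML for simplicity; real pages usually need an HTML-tolerant parser or a browser driver’s own locator API, and the markup and labels here are assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical account-summary markup (well-formed XHTML so ElementTree can parse it).
ORIGINAL = """
<table>
  <tr><td>Account</td><td>Everyday Checking</td></tr>
  <tr><td>Available balance</td><td>1,204.33</td></tr>
</table>
"""

# The same screen after a redesign inserted a notice row at the top.
REDESIGNED = """
<table>
  <tr><td>Notice</td><td>New statement available</td></tr>
  <tr><td>Account</td><td>Everyday Checking</td></tr>
  <tr><td>Available balance</td><td>1,204.33</td></tr>
</table>
"""

def by_position(table):
    """Brittle: assumes the balance always sits in row 2, column 2."""
    return table.findall("tr")[1].findall("td")[1].text

def by_anchor(table, label):
    """Resilient: find the row whose first cell matches a stable label, then read the neighboring cell."""
    for row in table.iter("tr"):
        cells = row.findall("td")
        if len(cells) >= 2 and (cells[0].text or "").strip() == label:
            return cells[1].text
    return None  # anchor not found: fail loudly upstream instead of guessing

for markup in (ORIGINAL, REDESIGNED):
    table = ET.fromstring(markup.strip())
    print(by_position(table), "|", by_anchor(table, "Available balance"))
# 1,204.33 | 1,204.33
# Everyday Checking | 1,204.33
```

After the redesign, the positional locator silently returns the wrong value, while the anchor still finds the balance.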

Native vs Full-Text vs OCR (UiPath)

In the context of screen scraping, UiPath is a robotic process automation (RPA) platform that automates workflows and can use AI features where useful. It offers three scraping modes:

- Native: This technique directly reads accessible text. It is fast and accurate.

- Full Text: Full Text captures text that a control exposes, even beyond visible areas.

- OCR: Here, image-based text, such as in scanned documents, is converted into plain, accessible text.

Each mode has its own use cases. As a recommended practice, test all three on difficult screens to determine which one is production-safe.

Headed vs Headless Automation

Another consideration in the screen scraping process is whether to use headed or headless browsers for automation. Headed automation runs a traditional browser with a visible interface. Although slower, it mimics human usage and is often the best option for debugging.

In contrast, headless automation adopts a browser that lacks a user interface. It is often faster and CI-friendly. However, it can sometimes be blocked by anti-bot defenses.

For optimal results, toggling between headed and headless automation is advised during diagnostics.

Where is Screen Scraping Used in Banking and Open Banking?

Historically, personal finance management apps such as budgeting tools asked users for their bank login credentials, signed in on their behalf, copied the account information, and displayed it on their own dashboards.

This sort of banking screen scraping worked when APIs didn’t exist. However, many regions now prefer open banking APIs since they provide structured data without sharing credentials. Open banking APIs also prioritize consent and are reliable. Nonetheless, scraping persists in instances where APIs are missing or incomplete.

What is Screen Scraping in Open Banking?

Open banking frameworks generally allow consumers to securely share their financial data with third-party apps through standardized APIs. When data is shared through an API under open banking rules, the data is structured with explicit permissions, thereby allowing for traceability.

By contrast, screen scraping accesses data by simulating a user login and reads pixels or DOM elements on the interface. Even a slight change in layout or UI can cause the integration to break without notice. Migration plans that phase out credential-sharing flows in favor of APIs are encouraged as they reduce technical fragility.

Policy & Industry Trendlines

Regulators and banks now favor standardized APIs over scraping, although many institutions allow some transitional scraping where APIs are unavailable. As a practical takeaway, treat scraping as a temporary technique. Make sure to always document user consent and minimize the scope of data captured to reduce both compliance risk and long-term maintenance costs.

Is Screen Scraping Legal?

The legality of screen scraping depends on:

- What you access. Scraping publicly available data is treated differently from extracting content with a consumer’s credentials behind a login screen. However, bypassing technical protections or rate limits may still violate platform ToS or anti-circumvention laws, even for otherwise public content.

- How you access. Automation with explicit user permission stands on firmer legal ground than bypassing technical protections, which may violate a platform’s ToS.

- Where you operate. Jurisdictions treat consumer data rights and privacy differently.

The above analysis is for informational purposes only and does not qualify as legal advice. If you plan to scrape sensitive data, consult qualified legal counsel.

Compliance-By-Design Checklist

Below is a quick checklist to help with compliance requirements:

- Obtain clear consent and explain the reason for data use.

- Maintain a preference for official APIs where available.

- Adopt respectful pacing. For public websites, respect robots.txt. For authenticated, desktop, mobile, or terminal targets, rely on applicable ToS, contracts, and explicit consent.

- Do not retain or store plaintext credentials.

- Keep an audit log of who accessed data and under what authorization.

- Conduct a data protection impact assessment (DPIA) or privacy impact assessment (PIA) whenever personal data is involved.

Incorporating compliance processes early helps cut long-term costs and reduce incident risk.
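For public websites, the robots.txt check in the checklist can be automated with Python’s standard `urllib.robotparser` module. This is a minimal sketch: the robots.txt content, domain, and user-agent string are hypothetical, and the file is parsed from a string so the example runs offline. As the checklist notes, robots.txt does not govern authenticated, desktop, mobile, or terminal targets, where ToS, contracts, and consent apply instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. Against a live site you would instead call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

AGENT = "example-research-bot"  # hypothetical user-agent string

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def may_fetch(url: str) -> bool:
    """True if robots.txt permits this agent to fetch the URL."""
    return parser.can_fetch(AGENT, url)

def polite_delay(default_s: float = 1.0) -> float:
    """Use the site's Crawl-delay when it declares one, else a conservative default."""
    delay = parser.crawl_delay(AGENT)
    return float(delay) if delay is not None else default_s

print(may_fetch("https://example.com/products"))         # True
print(may_fetch("https://example.com/account/balance"))  # False
print(polite_delay())                                    # 5.0
```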

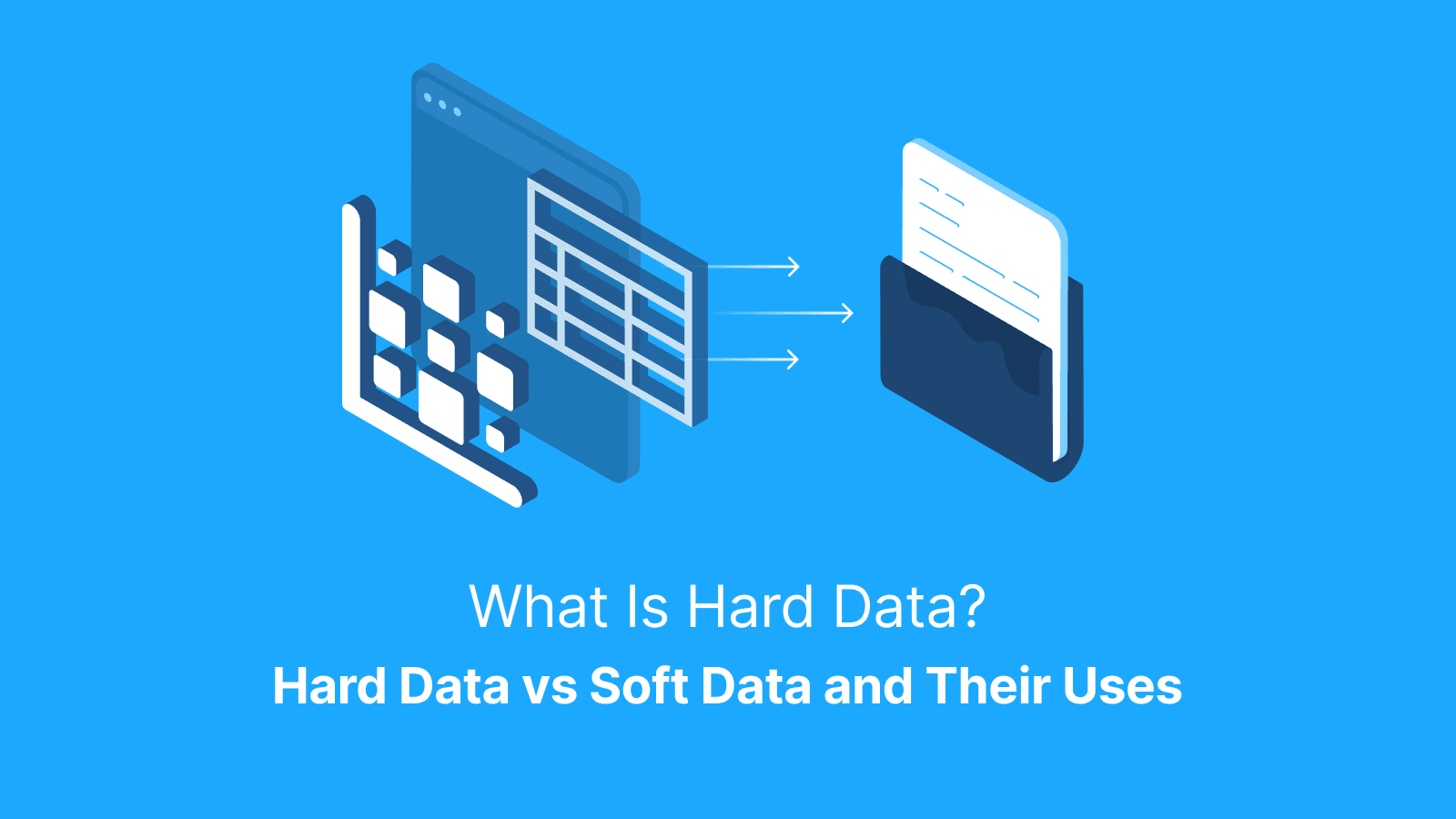

Screen Scraping vs Web Scraping vs APIs

Each of these data extraction methods varies in application and benefits.

- Screen scraping: This is the most brittle and expensive to maintain. However, in many cases, it may be the only option for legacy or closed systems. Also, in the short term, it may sometimes be more cost-efficient than APIs, particularly when APIs are costly or inaccessible.

- Web scraping: This method parses page code or the DOM. It can stay stable with regular maintenance, but outcomes depend on how often the target system changes.

- APIs: These deliver the most reliable path for data collection. At larger scales, APIs generally become the most cost-efficient option, offering stability and long-term sustainability.

Here is a table that highlights the performance of each method across important metrics, with short-term vs long-term costs called out explicitly.

| Method | Fidelity | Stability | Governance | Cost (short-term) | Cost (long-term) |
|---|---|---|---|---|---|
| Screen scraping | Variable | Fragile | Complex | Low–Medium | High |
| Web scraping | Medium | Moderate | Medium | Medium | Medium |
| APIs | High | Stable | Strong | Medium–High | Low |

The smart approach is to use scraping as a temporary bridge when APIs are absent or costly upfront, while planning a migration to APIs for long-term stability and cost efficiency.

RPA Tie-in (UiPath)

Robotic process automation (RPA) platforms rely on screen scraping to automate legacy systems that lack APIs. This applies to desktop apps and mainframe terminals, not just browsers. Because these automations interact with fragile interfaces, maintainability is key. Important practices include:

- Resilient selectors

- Anchors for dynamic UIs

- Timeouts/retries for reliability

At scale, orchestration tools like UiPath Orchestrator help you queue tasks and isolate errors so that individual failures don’t cascade.
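Timeouts usually take the form of an explicit wait: poll for the target element until it appears or a deadline passes, rather than sleeping for a fixed time. RPA suites and browser drivers ship their own wait mechanisms; the Python sketch below is a generic, hypothetical helper, with a fake screen standing in for a real UI.

```python
import time

def wait_until(condition, timeout=10.0, interval=0.5):
    """Poll `condition` until it returns a truthy value or `timeout` seconds elapse.

    Returns the truthy value; raises TimeoutError if the deadline passes first.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(interval)

class FakeScreen:
    """Hypothetical stand-in for a UI whose target element renders after a few polls."""

    def __init__(self, ready_after=3):
        self.polls = 0
        self.ready_after = ready_after

    def find_balance(self):
        self.polls += 1
        return "1,204.33" if self.polls >= self.ready_after else None

screen = FakeScreen()
print(wait_until(screen.find_balance, timeout=2.0, interval=0.01))  # 1,204.33
```

Keeping the timeout explicit means a missing element fails fast with a clear error instead of leaving the bot hanging.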

Further reading: What is Web Scraping and How to Use It in 2025? and What Is Data Retrieval, How It Works, and What Happens During It?

Methods, Tools & Software to Know

Here is a quick look at the screen scraping landscape, including important methods and software:

- Automation suites like UiPath and Power Automate. They thrive in enterprise workflows, offering integrated governance, though licensing can be costly.

- Browser drivers such as Selenium and Playwright. They are popular for scraping, but running them in headless mode can produce different results than running them with a visible browser.

- Desktop/vision libraries such as PyAutoGUI and Sikuli. These tools target on-screen regions and can be useful when no structured selectors exist. However, they are very sensitive to font and resolution changes.

- OCR engines such as Tesseract and cloud OCR services. They handle images and scanned documents, but their accuracy depends on font rendering and image quality.

- SaaS tools that provide managed scraping feeds. They offer flexibility, often at high subscription costs.

Across all options, the best approach is to start with a small proof of concept to validate resilience before scaling.

What is a Feature of the Native Screen-Scraping Method (UiPath)?

That’s pretty simple. Native usually targets visible, accessible controls, although behavior varies by application. Use Full Text for controls that expose text not shown on screen, and OCR for images and canvases.

Python Screen Scraping: Where to Start

Remember the tools recommended above? In Python screen scraping, several of them are combined to cover different needs.

- Playwright or Selenium to handle DOM-readable data in browsers.

- PyAutoGUI for true screen regions, such as desktop apps or canvases. Vision-based libraries may also apply here, as they provide pixel-level targeting.

- OCR engines suited to processing images and PDFs.

A pragmatic stack for a reliable workflow also includes:

- Request pacing and retry logic.

- Containerized workers for reproducibility.

- Writing parsers designed to tolerate minor visual or layout changes.

Bottom line: This kind of combination balances accuracy with maintainability while allowing you to scale on demand.
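As one example of a parser that tolerates minor visual changes, the sketch below reads monetary amounts regardless of currency symbols, stray spaces, thousands separators, or how negatives are displayed. It assumes `.` is the decimal separator; screens using European formats such as `1.204,33` would need a different rule.

```python
import re
from decimal import Decimal

def parse_amount(text: str) -> Decimal:
    """Parse amounts such as '$1,204.33', ' 1 204.33 ', '(45.00)' or '45.00-'.

    Assumes '.' is the decimal separator; commas and spaces are treated as grouping.
    A minus sign anywhere, or surrounding parentheses, marks a negative value.
    """
    cleaned = text.strip()
    negative = "-" in cleaned or (cleaned.startswith("(") and cleaned.endswith(")"))
    digits = re.sub(r"[^\d.]", "", cleaned)  # drop symbols, separators, and signs
    if not re.fullmatch(r"\d*\.?\d+", digits):
        raise ValueError(f"unrecognized amount: {text!r}")
    value = Decimal(digits)
    return -value if negative else value

for sample in ["$1,204.33", " 1 204.33 ", "(45.00)", "45.00-", "€ 12"]:
    print(repr(sample), "->", parse_amount(sample))
```

Using `Decimal` instead of `float` keeps currency values exact, which matters when scraped totals are later reconciled.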

How Do Proxies Support Screen-Scraping Workflows (Ethically)?

Proxies do not change the legal status of scraping. They simply modify IP addresses or geolocation to reproduce user conditions for QA, localization checks, or unbiased content comparisons.

While not required in every workflow, high-quality proxies are valuable for geo-replication, QA testing, and resilience at scale. They help avoid blocks and bans, maintain stable sessions, and allow location switching to simulate real users. In contrast, free proxies often lead to frequent blocking and incomplete or unusable data.

For public or consented tests, residential or mobile proxies such as Live Proxies help avoid IP clustering and simulate different regions. Sticky sessions maintain a persistent user state, while rotating IPs distribute load.

One of Live Proxies' main features is exclusive IP allocation, which ensures that the IPs assigned to you are not being used on the same target by a competitor. Live Proxies also offers fast, stable proxies compared with many other options on the market.

Always conduct testing with permission and respect site policies.

Rotating vs Sticky Sessions

Use rotating IPs when you need broad coverage or want to avoid throttling. Sticky sessions are preferable for workflows that require a persistent state, such as logins, multi-step wizards, or shopping baskets. Sticky session duration varies by provider and configuration, ranging from minutes to hours, so choose a duration that fits the workflow.

Your choice should depend on site behavior, rate limits, and risk tolerance. Rotation spreads load across IPs and lowers detection risk, while sticky sessions preserve continuity for complex interactions.
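To make the distinction concrete, here is a minimal client-side sketch of both modes. The endpoint names are placeholders and the `ProxySelector` class is hypothetical: many providers implement sticky sessions on their side through their own configuration, so check your provider’s documentation rather than treating this as its API.

```python
import itertools
import time

class ProxySelector:
    """Hypothetical client-side helper illustrating rotating vs sticky proxy use.

    rotating(): a different endpoint for each request, spreading load.
    sticky(key): the same endpoint for a session key until its TTL expires.
    """

    def __init__(self, endpoints, sticky_ttl_s=600.0, clock=time.monotonic):
        self._cycle = itertools.cycle(endpoints)
        self._sessions = {}  # session key -> (endpoint, expiry timestamp)
        self._ttl = sticky_ttl_s
        self._clock = clock

    def rotating(self):
        return next(self._cycle)

    def sticky(self, session_key):
        now = self._clock()
        endpoint, expires = self._sessions.get(session_key, (None, 0.0))
        if endpoint is None or now >= expires:
            endpoint = next(self._cycle)  # new or expired session: pick a fresh endpoint
            self._sessions[session_key] = (endpoint, now + self._ttl)
        return endpoint

# Placeholder endpoints; real hostnames, ports, and credentials come from your provider.
pool = ["proxy-a.example:8000", "proxy-b.example:8000", "proxy-c.example:8000"]
selector = ProxySelector(pool, sticky_ttl_s=900)

print([selector.rotating() for _ in range(3)])  # one endpoint per request, cycling a -> b -> c
login = selector.sticky("user-42-login")
print(login == selector.sticky("user-42-login"))  # True: same IP for the whole login flow
```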

Geo and Device Realism

Targeting specific cities, countries, or mobile carrier IPs allows screen scraping tools to capture localized content such as prices, taxes, or feature variations. To ensure reproducibility and auditability, record the geo and device context alongside information collected in your data lineage.

Further reading: What Are Proxies for Bots? Roles and How to Choose Proxies for Different Bot Tasks and What is an Anonymous Proxy: Definition, How It Works, Types & Benefits.

Pros, Limits, and Risks

Screen scraping has its benefits, limitations, and potential risks. Let’s explore them all in one fell swoop.

The perks of scraping are pretty clear:

- Provides access where APIs don’t exist.

- Enables quick pilots.

- Supports legacy system integration.

However, it comes with significant costs:

- Flows are fragile to UI changes.

- OCR can introduce errors.

- Credential handling carries risk.

- Vendor terms and EULAs may restrict use.

- Higher long-term maintenance.

A practical rule of thumb? Scraping may be cheaper to start with, but APIs win on long-term cost efficiency. Choose APIs first, web scraping second, and screen scraping only when necessary.

Banking-Specific Risks

Screen scraping in banking is not without its risks.

- Credential-sharing exposes users to fraud.

- Multifactor authentication (MFA) challenges complicate automated flows.

- Scrapers face potential liability if data is mishandled.

Wherever possible, use OAuth-style delegated flows to reduce exposure. If credential-based scraping must continue during a migration to APIs, pair it with strong user education.

Implementation Guide (Design → Build → Operate)

A pragmatic screen-scraping lifecycle follows these steps:

- Define your scope by identifying target screens and obtaining consent.

- Capture a “golden path” recording of the workflow end-to-end.

- Select the appropriate extraction mode for each screen between Native, Full Text, and OCR.

- Build resilient selectors or anchors. Avoid hard-coded coordinates and use a robust locator.

- Implement retries with backoff so that transient failures are handled gracefully.

- Include logging with screenshots for failures to ease troubleshooting later on.

- Validate results against a golden dataset to ensure the extracted data matches the expected result.

- Schedule regular maintenance windows, checking for UI changes.

- Document clear ownership and runbooks for long-term reliability.

Handling CAPTCHA & Anti-Automation

To reduce the risk of triggering anti-automation defenses, pace interactions at a human-like rate, cache data where possible, and avoid unnecessary page reloads. Ultimately, prefer official API access over circumventing protections, which may violate ToS or relevant laws.

Also treat interactive challenges such as CAPTCHA or MFA prompts as boundaries to respect, not obstacles to bypass.

Hardening OCR Pipelines

To improve OCR accuracy, normalize image resolution (dots per inch, or DPI) and font rendering. On top of that, use language dictionaries tailored to the content for spellchecking. Apply post-OCR validation, such as regex checks, checksums, or balancing totals, before persisting results. These steps help catch errors early and produce higher-quality, more reliable data.
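The post-OCR checks above are cheap to implement. The sketch below validates a hypothetical OCR’d bank statement three ways: a regex on dates, the ISO 13616 IBAN mod-97 checksum (any single misread digit breaks it), and a balancing check that the opening balance plus transactions equals the closing balance. The statement structure and field names are assumptions for illustration.

```python
import re
from decimal import Decimal

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")

def iban_is_valid(iban: str) -> bool:
    """ISO 13616 mod-97 check: catches any single misread digit in an account number."""
    compact = iban.replace(" ", "").upper()
    if not re.fullmatch(r"[A-Z]{2}\d{2}[A-Z0-9]{1,30}", compact):
        return False
    rearranged = compact[4:] + compact[:4]  # move country code and check digits to the end
    numeric = "".join(str(int(ch, 36)) for ch in rearranged)  # A=10, B=11, ..., Z=35
    return int(numeric) % 97 == 1

def validate_statement(stmt: dict) -> list:
    """Return validation errors; an empty list means the record can be persisted."""
    errors = []
    if not iban_is_valid(stmt["iban"]):
        errors.append("IBAN checksum failed")
    if not all(ISO_DATE.fullmatch(d) for d in stmt["dates"]):
        errors.append("malformed date")
    if stmt["opening"] + sum(stmt["transactions"], Decimal("0")) != stmt["closing"]:
        errors.append("totals do not balance")
    return errors

good = {
    "iban": "GB82 WEST 1234 5698 7654 32",  # a widely used example IBAN
    "dates": ["2024-01-05", "2024-01-06"],
    "opening": Decimal("100.00"),
    "transactions": [Decimal("-4.50"), Decimal("2500.00")],
    "closing": Decimal("2595.50"),
}
# The same statement with typical OCR slips: 2 read as 1, 1 read as l, 5 read as 8.
bad = dict(good, iban="GB82 WEST 1234 5698 7654 31", dates=["2024-0l-05"], closing=Decimal("2595.80"))

print(validate_statement(good))  # []
print(validate_statement(bad))   # ['IBAN checksum failed', 'malformed date', 'totals do not balance']
```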

How Do You Scale and Monitor Screen Scrapers?

If you’re looking to scale your screen scraping operation, here are some proven steps to achieve that goal.

- Scale horizontally with worker pools and queues.

- Design tasks to be idempotent.

- Maintain checkpointed sessions.

- Introduce rate-aware schedulers to respect target system limits.

- Define your Service Level Objectives (SLOs), including success rates, average and 95th percentile latencies, as well as alerts for UI changes.

- Deploy canary jobs to detect interface drift early.

- Adopt central dashboards with screenshots and diff comparisons to monitor performance and efficiently troubleshoot issues.
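The first two points, worker pools with queues and idempotent tasks, can be sketched in-process with Python’s `threading` and `queue` modules. Each worker claims a task key before doing the work, so a duplicate enqueue (for example, after a retry) is skipped rather than scraped twice. `scrape_account` is a hypothetical stand-in; a production system would typically use an external queue and a durable store for the idempotency keys.

```python
import queue
import threading

results = {}      # task key -> scraped result
claimed = set()   # keys already taken by some worker (the idempotency guard)
calls = []        # every real scrape, recorded to show duplicates are skipped
lock = threading.Lock()

def scrape_account(account_id):
    """Hypothetical stand-in for a real, slow scraping job."""
    with lock:
        calls.append(account_id)
    return f"statement-for-{account_id}"

def claim(key):
    """Atomically mark a key as taken; False if another worker already has it."""
    with lock:
        if key in claimed:
            return False
        claimed.add(key)
        return True

def worker(tasks):
    while True:
        account_id = tasks.get()
        if account_id is None:  # sentinel: no more work for this worker
            return
        if claim(account_id):
            result = scrape_account(account_id)
            with lock:
                results[account_id] = result

def run(task_ids, num_workers=4):
    tasks = queue.Queue()
    workers = [threading.Thread(target=worker, args=(tasks,)) for _ in range(num_workers)]
    for w in workers:
        w.start()
    for task_id in task_ids:
        tasks.put(task_id)
    for _ in workers:
        tasks.put(None)  # one sentinel per worker, queued after all real tasks
    for w in workers:
        w.join()

# Duplicate IDs (e.g. from a retried enqueue) are scraped only once.
run(["acct-1", "acct-2", "acct-1", "acct-3", "acct-2"])
print(sorted(results))  # ['acct-1', 'acct-2', 'acct-3']
print(len(calls))       # 3
```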

Observability & Alerting

For observability and alerting, follow this four-part approach:

- Maintain structured logs and track per-selector error counts to quickly identify problem areas.

- Use screenshot diffs and DOM/hash drift monitors to detect UI changes.

- Configure pager thresholds for critical failures.

- Conduct postmortems after incidents to refine selectors and improve the resilience of your scraping workflows over time.
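Per-selector error counts and SLO metrics such as success rate and 95th-percentile latency can both be derived from structured logs. The sketch below assumes a hypothetical JSON-lines log format and illustrative SLO targets, and uses the nearest-rank method for the percentile; other percentile definitions give slightly different numbers on small samples.

```python
import json
import math
from collections import Counter

# Hypothetical structured log lines, one JSON object per scraping job.
LOG_LINES = [
    '{"job": 1, "ok": true,  "latency_s": 1.2}',
    '{"job": 2, "ok": true,  "latency_s": 0.9}',
    '{"job": 3, "ok": false, "latency_s": 1.1, "selector": "#balance"}',
    '{"job": 4, "ok": true,  "latency_s": 3.8}',
    '{"job": 5, "ok": false, "latency_s": 1.0, "selector": "#balance"}',
    '{"job": 6, "ok": true,  "latency_s": 1.3}',
    '{"job": 7, "ok": false, "latency_s": 0.8, "selector": "#txn-table"}',
    '{"job": 8, "ok": true,  "latency_s": 5.2}',
]

SLO = {"min_success_rate": 0.95, "max_p95_latency_s": 4.0}  # illustrative targets

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(lines):
    events = [json.loads(line) for line in lines]
    latencies = [e["latency_s"] for e in events]
    return {
        "success_rate": sum(e["ok"] for e in events) / len(events),
        "avg_latency_s": sum(latencies) / len(latencies),
        "p95_latency_s": percentile(latencies, 95),
        "errors_by_selector": Counter(e["selector"] for e in events if not e["ok"]),
    }

def breaches(summary, slo=SLO):
    """List SLO violations; a non-empty list is what would trigger a page."""
    alerts = []
    if summary["success_rate"] < slo["min_success_rate"]:
        alerts.append("success rate below SLO")
    if summary["p95_latency_s"] > slo["max_p95_latency_s"]:
        alerts.append("p95 latency above SLO")
    return alerts

summary = summarize(LOG_LINES)
print(summary["errors_by_selector"].most_common(1))  # [('#balance', 2)]
print(breaches(summary))  # ['success rate below SLO', 'p95 latency above SLO']
```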

Throughput & Cost Controls

You can optimize scraping workflows by parallelizing tasks per domain while respecting polite rate limits so you don’t overload target systems. In addition, use exponential backoff for retries and configure autoscaling rules to manage cloud resources efficiently.

This approach balances throughput against politeness, reduces the risk of IP bans, and helps keep operational costs under control.
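Exponential backoff means each retry waits roughly twice as long as the previous one, up to a cap; adding random jitter keeps many workers from retrying in lockstep. The sketch below uses the "full jitter" variant. `TransientError` is a hypothetical exception type standing in for whatever your client raises on timeouts or HTTP 429/503 responses, and `sleep` and `rng` are injectable so the behavior can be tested without waiting.

```python
import random
import time

class TransientError(Exception):
    """Hypothetical error for failures worth retrying (timeouts, HTTP 429/503, ...)."""

def retry_with_backoff(operation, max_attempts=5, base_delay_s=1.0, max_delay_s=30.0,
                       sleep=time.sleep, rng=random.random):
    """Call `operation`, retrying TransientError with capped exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller see the failure
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)  # 1, 2, 4, 8, ... capped
            sleep(rng() * ceiling)  # full jitter: wait a random time in [0, ceiling)

# Usage: a fetch that fails twice, then succeeds. rng is pinned to 1.0 to make delays visible.
failures_left = [2]

def flaky_fetch():
    if failures_left[0] > 0:
        failures_left[0] -= 1
        raise TransientError("HTTP 503")
    return "page content"

delays = []
print(retry_with_backoff(flaky_fetch, sleep=delays.append, rng=lambda: 1.0))  # page content
print(delays)  # [1.0, 2.0]
```

Only transient errors are retried; a selector that no longer matches is a permanent failure and should surface immediately.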

What Platform-Specific Considerations Matter (Web, Desktop, Mobile, Terminal)?

Screen scraping differs across platforms. The table below gives you an overview of web, desktop, mobile, and terminal surfaces as well as specific considerations for each.

| Platform | Key Access Method | Specific considerations |
|---|---|---|
| Web | DOM, CSS/XPath, Canvas | Use robust CSS/XPath selectors and wait for elements to render. Try simulating realistic events for reliable input |
| Desktop | Win32/.NET accessibility trees | Prefer accessibility-first targeting and fallback to coordinates only if necessary. Input reliability improves with standardized automation libraries |
| Mobile | Android and iOS accessibility services | Use accessibility IDs and handle dynamic lists carefully. Test on real devices/device farms for accurate input timing |
| Terminal | 3270/5250 emulators | Use field-based or coordinate locators. Handle key sequences (including function/navigation keys) accurately |

Web & Canvas/Graphics

Modern web apps create distinctive challenges for screen scraping. Single-page applications (SPAs) often load content dynamically, and infinite-scroll pages only render content as the user scrolls. Both patterns can affect scraping accuracy.

Similarly, Shadow DOM can hide elements from standard DOM queries, and canvas-only renders display information as pixels, which makes OCR necessary. Best practice is a progressive approach: prefer standard DOM access where possible and fall back to OCR only for content that cannot be reached through the DOM.

Desktop & Virtual Desktops

Desktops and virtual desktops need special consideration, too. First, prioritize accessibility-first targeting, using UI elements exposed through accessibility APIs for more reliable extraction. When such elements aren’t available, coordinate-based fallbacks can be used, although they are sensitive to layout shifts.

In addition, high-DPI scaling can distort visuals and reduce OCR accuracy, while remote sessions (RDP/VDI) introduce latency and image compression artifacts that further degrade OCR fidelity. Accounting for these factors is essential for reliable, accurate data capture in desktop and virtualized environments.

Mobile App Screens

When automating mobile app screens, here are some action points to keep in mind.

- Prioritize accessibility IDs to locate elements reliably.

- Handle dynamic lists carefully.

- Account for keyboard pop-ups that may obscure fields.

- Stay aware of app-only anti-automation checks that can block bots.

For realistic testing, use device farms that cover a range of devices and OS versions. This helps ensure your automation works in the environments your users actually encounter.

Terminal/Mainframe Screens

Screen scraping on 3270/5250 terminals relies on field-based extraction. This simply means data is identified by predefined fields rather than a visual layout. When these fields aren’t available, row/column targeting can locate content accurately.

Maintaining session keep-alive strategies is crucial to prevent timeouts and ensure that the automation can complete multi-step workflows reliably without interruption.
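In practice, terminal screens are read through the emulator’s automation interface, which returns a fixed-width text buffer (commonly 24 rows by 80 columns). The sketch below assumes such a buffer has already been captured and shows row/column targeting against a field map, plus a title check so fields are never read from the wrong screen. The screen layout, field positions, and `render_row` helper are hypothetical.

```python
def render_row(*fields, width=80):
    """Build one fixed-width screen row from (column, text) pairs (test-data helper)."""
    row = [" "] * width
    for col, text in fields:
        row[col:col + len(text)] = text
    return "".join(row)

# Hypothetical captured buffer from an account inquiry screen.
SCREEN = [
    render_row((0, "ACCT INQUIRY")),
    render_row((0, "ACCOUNT NO:"), (12, "00123456"), (24, "STATUS:"), (32, "ACTIVE")),
    render_row((0, "BALANCE:"), (12, "1204.33"), (24, "CURRENCY:"), (34, "USD")),
]

# Field map: name -> (row, column, length), all zero-based.
FIELDS = {
    "account_no": (1, 12, 8),
    "status": (1, 32, 8),
    "balance": (2, 12, 10),
    "currency": (2, 34, 3),
}

def read_fields(screen, fields, expected_title="ACCT INQUIRY"):
    """Read fixed-position fields, but only after confirming we are on the expected screen."""
    if not screen[0].startswith(expected_title):
        raise RuntimeError("unexpected screen; refusing to read fields")
    return {name: screen[row][col:col + length].strip()
            for name, (row, col, length) in fields.items()}

print(read_fields(SCREEN, FIELDS))
```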

Data Quality, Normalization & Schema Mapping

Raw screen-scraped data often requires cleaning before use. This cleaning process involves a broad range of operations, including trimming whitespace, type casting values, deduplication, key reconciliation, and schema versioning.
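The first cleaning operations (trimming and type casting) might look like this in Python. The raw rows, the day/month/year date format, and the field names are assumptions for illustration. Amounts are kept as exact decimal strings so they survive JSON export, and the normalized records are exported as JSON and CSV with the standard library.

```python
import csv
import io
import json
from datetime import datetime
from decimal import Decimal

# Hypothetical raw rows as captured from a transactions screen.
RAW_ROWS = [
    ["  05/01/2024 ", " Coffee Shop ", " -$4.50 "],
    ["06/01/2024", "Salary  ", "$2,500.00"],
]

def normalize_row(row):
    """Trim whitespace, then cast each cell to a typed, canonical value."""
    date_text, description, amount_text = (cell.strip() for cell in row)
    date = datetime.strptime(date_text, "%d/%m/%Y").date()  # assumes day/month/year
    amount = Decimal(amount_text.replace("$", "").replace(",", ""))
    return {"date": date.isoformat(), "description": description, "amount": str(amount)}

def to_csv(records):
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["date", "description", "amount"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()

records = [normalize_row(row) for row in RAW_ROWS]
print(json.dumps(records, indent=2))
print(to_csv(records))
```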

For best results, implement acceptance rules to reject implausible values. In addition, automate reconciliation against a trusted ledger to ensure accuracy whenever possible.
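Deduplication, acceptance rules, and ledger reconciliation can be chained as simple functions. In the sketch below, the transaction IDs, the plausibility threshold, and the ledger total are all hypothetical; real acceptance rules would come from the business domain.

```python
from decimal import Decimal

# Hypothetical normalized transactions; T2 was captured twice across pages,
# and T3 is an implausible value, e.g. from an OCR misread.
captured = [
    {"id": "T1", "amount": Decimal("-4.50")},
    {"id": "T2", "amount": Decimal("2500.00")},
    {"id": "T2", "amount": Decimal("2500.00")},
    {"id": "T3", "amount": Decimal("-9999999.00")},
]

MAX_ABS_AMOUNT = Decimal("100000")  # illustrative plausibility threshold

def deduplicate(rows, key="id"):
    """Keep the first occurrence of each key, preserving order."""
    seen, unique = set(), []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique

def accept(rows):
    """Split rows into accepted and rejected according to the acceptance rule."""
    accepted, rejected = [], []
    for row in rows:
        (accepted if abs(row["amount"]) <= MAX_ABS_AMOUNT else rejected).append(row)
    return accepted, rejected

def reconcile(rows, ledger_total):
    """True if the accepted rows sum exactly to the trusted ledger total."""
    return sum((r["amount"] for r in rows), Decimal("0")) == ledger_total

unique = deduplicate(captured)
accepted, rejected = accept(unique)
print([r["id"] for r in accepted], [r["id"] for r in rejected])  # ['T1', 'T2'] ['T3']
print(reconcile(accepted, ledger_total=Decimal("2495.50")))       # True
```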

Security and Secrets Management

Several practices help keep a screen scraping operation secure:

- Use least-privilege accounts.

- Store credentials in vaults with rotation policies.

- Handle MFA devices securely.

- Apply network allowlists.

- Ensure encryption in transit and at rest.

- Redact personally identifiable information (PII) in logs and screenshots.

- Maintain a clear separation of duties between bot operators and data consumers to minimize risk and enforce accountability.
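For the log-redaction point, one option in Python is a `logging.Filter` that rewrites each record’s message before it is written. The two patterns below (email addresses and long digit runs such as account numbers) are illustrative assumptions, not a complete PII detector, and screenshots would need separate, image-level redaction.

```python
import io
import logging
import re

# Illustrative patterns only; tune them to the identifiers in your own data.
PII_PATTERNS = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\b\d{8,19}\b"), "[ACCOUNT]"),  # account- or card-like digit runs
]

class RedactPII(logging.Filter):
    """Mask PII patterns in each record's message before it is emitted."""

    def filter(self, record):
        message = record.getMessage()  # merge msg and args first
        for pattern, replacement in PII_PATTERNS:
            message = pattern.sub(replacement, message)
        record.msg, record.args = message, ()
        return True  # never drop the record, only redact it

stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.addFilter(RedactPII())
logger = logging.getLogger("scraper")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("Fetched account %s for %s", "00123456", "jane.doe@example.com")
print(stream.getvalue())  # Fetched account [ACCOUNT] for [EMAIL]
```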

Change Detection & Maintenance Playbook

You should consider using hashes or visual diffs, as these are proactive UI-change monitoring tools that can detect drift. In addition, run semantic selector tests in CI pipelines and schedule regular health checks. Another possible action is to implement a triage process to patch selectors quickly without risky hotfixes.

Recommended: Always keep a “break glass” rollback to previous stable flows, as this will ensure rapid recovery if automation breaks.
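One way to implement a hash-based drift monitor is to hash a page’s structure (tags and a few stable attributes) while ignoring its text, so routine data changes don’t raise alerts but layout changes do. The sketch below uses Python’s standard `html.parser`; which attributes to track (`id`, `class`, `name` here) is an assumption to tune per target.

```python
import hashlib
from html.parser import HTMLParser

class SkeletonParser(HTMLParser):
    """Collect tag names and selected attributes, ignoring text content."""

    TRACKED_ATTRS = ("id", "class", "name")

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_starttag(self, tag, attrs):
        kept = sorted((k, v or "") for k, v in attrs if k in self.TRACKED_ATTRS)
        self.parts.append(f"<{tag} {kept}>")

    def handle_endtag(self, tag):
        self.parts.append(f"</{tag}>")

def structure_hash(html: str) -> str:
    """SHA-256 of the page skeleton; changes only when structure changes."""
    parser = SkeletonParser()
    parser.feed(html)
    parser.close()
    return hashlib.sha256("".join(parser.parts).encode()).hexdigest()

baseline = '<div id="summary"><span class="balance">1,204.33</span></div>'
new_value = '<div id="summary"><span class="balance">1,190.00</span></div>'
redesign = '<div id="summary"><p class="amount">1,204.33</p></div>'

print(structure_hash(baseline) == structure_hash(new_value))  # True: only data changed
print(structure_hash(baseline) == structure_hash(redesign))   # False: layout drifted
```

A canary job can store the baseline hash and alert when a fresh capture no longer matches it.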

Testing and QA Strategy

Here are some suggested tips for ensuring screen scraping reliability and testing integrity.

- Maintain golden datasets for regression testing.

- Create unit tests for parsers.

- Run integration tests on staging UIs.

- Use synthetic monitors in production.

- Perform A/B validation against API data when available.

- Capture before-and-after artifacts to accelerate root cause analysis.
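A golden-dataset regression check can be as simple as diffing extracted records against a stored, known-good copy keyed by a stable ID. The sketch below reports missing, unexpected, and changed records; the record shape is hypothetical, and in a test suite you would assert that all three lists are empty.

```python
def diff_against_golden(extracted, golden, key="id"):
    """Compare extracted records with a known-good (golden) dataset keyed by `key`."""
    got = {record[key]: record for record in extracted}
    want = {record[key]: record for record in golden}
    return {
        "missing": sorted(want.keys() - got.keys()),
        "unexpected": sorted(got.keys() - want.keys()),
        "changed": sorted(k for k in want.keys() & got.keys() if want[k] != got[k]),
    }

# Hypothetical golden records and a run where OCR misread one amount,
# one row was skipped, and a stray row appeared.
golden = [{"id": "T1", "amount": "-4.50"}, {"id": "T2", "amount": "2500.00"}]
extracted = [{"id": "T1", "amount": "-4.80"}, {"id": "T3", "amount": "12.00"}]

print(diff_against_golden(extracted, golden))
# {'missing': ['T2'], 'unexpected': ['T3'], 'changed': ['T1']}
```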

Alternatives to Screen Scraping

Interested in alternative forms of accurate data extraction without screen scraping software? Below are some of the most trusted options.

- Official APIs: This alternative reduces legal exposure through consent approvals and standardized access. It is also known to lower maintenance costs by avoiding UI drift and ensuring high-quality, up-to-date data.

- Bulk exports/reports: This option avoids credential handling and simplifies integration, because you parse static files. Bulk exports also tend to provide complete and consistent datasets.

- Partner aggregators or clearing houses: Partner aggregators handle compliance and unify multiple sources, which improves reliability and reduces integration complexity.

- Webhooks: Known to deliver event-driven, consented updates, webhooks also minimize stale data and infrastructure overhead.

Each of these methods lowers legal exposure, cuts maintenance costs, and improves data quality. To close, implement a phased migration roadmap that gradually replaces scraping with these more durable methods.

Conclusion

Screen scraping is simply the automation of copying what users see on the screen. Despite flaws like fragility and compliance risks, screen scraping remains an indispensable tool when APIs don’t exist. Besides, because APIs can be expensive or unavailable upfront, screen scraping often provides a more cost-efficient short-term bridge. However, APIs generally prove more cost-efficient and sustainable at larger scales.

Banking is shifting toward open-banking APIs, but scraping persists where APIs are unavailable or incomplete. On the tooling side, UiPath and Python libraries offer multiple capture methods, and proxies such as Live Proxies can support ethical QA testing, even though they don’t alter legal obligations.

Going forward, adopt a sustainable approach that prioritizes APIs and builds compliance-by-design. All in all, use screen scraping only as a temporary bridge and not a long-term solution.

FAQs

What is screen scraping in banking?

Screen scraping in the banking context is the automated extraction of balances and transactions from a platform’s display using credential-based logins. The process simulates everyday user interactions and is often used when APIs are not available. It carries higher compliance risks than consented open-banking APIs and is being phased out in favor of them in many regions.

Is screen scraping legal?

The legality of screen scraping depends on several factors, such as whether the content is publicly available or behind a login, adherence to ToS, and the local jurisdiction. Seek legal counsel before extracting sensitive data, and avoid bypassing access controls. For regulated data, maintain audit trails and minimize data collection.

What is a feature of the native screen-scraping method in UiPath?

Native screen scraping reads text directly from the UI and is optimized for speed and accuracy. As noted earlier, it generally targets visible, accessible text elements, so use Full Text for text a control exposes off screen and OCR for images or canvases.

Python screen scraping vs web scraping—what’s the difference?

Python screen scraping captures pixels or screen regions using vision libraries and OCR, whereas web scraping parses the DOM/HTML with tools like Playwright or Selenium. The former suits desktop apps, while the latter works best with structured web data. Pitfalls common to both include anti-automation measures and canvas-only rendering.

What are the best screen-scraping tools?

For desktop and terminal automation, the best tools are RPA suites like UiPath or Power Automate. Playwright and Selenium are highly recommended browser drivers for web automation. Similarly, some of the best OCR engines for scanned documents are Tesseract and cloud OCR. Finally, SaaS apps are another set of proven screen scraping tools that manage and provide infrastructure for scraping.

Do proxies make screen scraping legal?

No, proxies do not make screen scraping legal. They only change the IP address or geolocation, which is useful for QA and load distribution. High-quality proxies can still be important for scraping at scale because they reduce bans and failed sessions. That said, always test with permission and adhere to rate limits. For public websites, follow robots.txt; for authenticated or desktop targets, follow the applicable ToS, contracts, and consent policies.

How do I stop scrapers from breaking when a page changes?

To stop screen scraping software from breaking, use resilient selectors and anchors. Additionally, implement visual/DOM hash monitoring while also introducing canary jobs and retry/backoff strategies. Finally, maintain a golden dataset and a rapid patch playbook to validate fixes quickly.

Can screen scraping handle PDFs or images?

Yes, it can, especially via OCR pipelines with DPI normalization, language dictionaries, and post-OCR validation (regex, checksums). However, whenever possible, prefer native exports (CSV/XML) or API endpoints for higher fidelity and reliability.